General Purpose GPU Programming is a huge interest of mine. Having multiple threads in a program is one thing, but having thousands to perform a complex mathematical computation, that's a whole different story.

With GPGPU programming we can do hundreds of calculations at the same time, rather than doing one at a time sequentially. Since modern GPUs have thousands of cores, we can put a calculation on each core and perform many calculations at once.

Friday, 12 April 2013

Showcasing our Game

The Level Up Showcase was on April 3rd, 2013.

Level Up is an annual event where students from all around the GTA showcase their games.

Level Up was an experience to remember. It was held in the Design Exchange in Downtown Toronto. We arrived at the location at 10 am and stayed there until 11 pm. Needless to say, it was a long day. All throughout the day, the place was packed. There were very few moments where someone wasn’t playing our game, whether it was a fellow student, a visitor or an industry professional.

Level Up was neat because we were able to see the games produced by other students from other post-secondary institutions in the GTA. We exchanged business cards with industry professionals and other students.

UOIT GameCon was on April 9th, 2013. GameCon is a UOIT event where all UOIT Game Dev. students come together to showcase their games. GameCon is awesome because we get to see what our fellow classmates were able to create over the semester.

Our game, A Case of the Mondays, won an award for Player's Choice Best Second Year Gameplay. That was pretty cool; it is very rewarding to know people enjoyed playing our game.

Evolution of the Graphics in A Case of the Mondays

From the start we wanted to create a game that looked great and played well. Since we have three programmers on our team, I was able to focus on the rendering and graphics programming while the other programmers focused on the gameplay. By splitting the work this way, we were able to achieve the best of both worlds.

Shadow mapping provides a subtle but welcome addition to the scene.

I wanted to make the game look as good as possible, and in order to do that I had to use modern OpenGL 3.0 functionality. This included VBOs, VAOs, shaders and our own matrix stack.

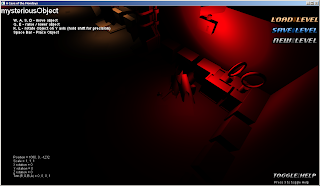

Here is a screenshot of the game as it appeared back in December 2012.

Here we have basic per-vertex shading performed by deprecated OpenGL lighting functions.

We then implemented basic shaders to perform per-fragment lighting. In the image below we have two per-fragment spot lights which light our scene.

As soon as first semester ended it became apparent to us that we could make a pretty game at our experience level.

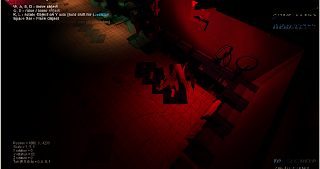

Here are some screenshots of the final build of the game.

We are using deferred shading, which allows us to have dozens of lights on screen. We have also written our own blending shader and shadow mapping, and we apply a bright pass filter to make the lit areas of the game look brighter.

The images below compare shadow mapping vs no shadow mapping.

[Image: shadow mapping disabled]

[Image: shadow mapping enabled]

The difference between the first semester screenshots and the second semester screenshots really makes me proud to say that I worked on this game. Graphically it looks like a pretty decent game, in my opinion.

Prototyping Composition in Photoshop

This blog post is more of a tutorial. We explore ways to composite two rendering passes together. You could just keep jimmying values in your shaders until you get your scene rendering how you like, or you could save some time and use a tool like Photoshop to prototype.

Prototyping your composition in Photoshop is mind-blowingly easy. First, let's reflect on what composition actually does: it takes two images and outputs one image composed of the two input images.

A traditional method of composition is using an additive blend, where you just take the colors of the two input images and add them together to get your composited image. The code looks like this:

It's dead simple.
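As a rough illustration of the additive blend described above, here is a minimal CPU-side sketch in C rather than shader code. The `Color` struct and the function names are made up for this example, and the clamp to [0, 1] assumes a normalized color buffer.

```c
/* Illustrative RGB color with channels in [0, 1]; not a type from the game. */
typedef struct {
    float r, g, b;
} Color;

/* Clamp one channel to [0, 1], as a normalized color buffer would. */
static float clamp01(float x) {
    if (x < 0.0f) return 0.0f;
    if (x > 1.0f) return 1.0f;
    return x;
}

/* Additive blend: add the two inputs channel by channel. */
Color blend_additive(Color a, Color b) {
    Color out = { clamp01(a.r + b.r), clamp01(a.g + b.g), clamp01(a.b + b.b) };
    return out;
}
```

In a fragment shader the same idea would typically be a single addition of the two sampled colors.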

Photoshop has a whole list of blending modes that can be applied to layers. To prototype your composition in Photoshop, all you need to do is take screenshots of the two images you want to composite, put them in their own layers in Photoshop and cycle through the blend options.

Above are the two images I want to composite together. On the left is my unlit scene and on the right is my light.

Here I am applying a divide blend on my two images.

Using different blend options to composite images can create a wide array of different effects.

Here is a multiplicative blend.

The multiplicative blend creates a very dimly lit scene.
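A quick sketch of why the multiplicative blend comes out dim, written in C on a single channel. The function name is hypothetical and both inputs are assumed to be in [0, 1]: multiplying two values in that range can never give a result brighter than either input.

```c
/* Multiply blend on one channel; both inputs are assumed to be in [0, 1]. */
float blend_multiply(float scene, float light) {
    return scene * light;
}
```

For example, a scene value of 0.5 under a light value of 0.5 yields 0.25.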

And here is a soft light blend, which is what we use in A Case of the Mondays.

The soft light blend creates a scene with a heavier light influence.

By performing a simple Google search, you can find the mathematical formula to perform almost any blend. Here is the function for a soft light:

From this function, we can easily create a shader which implements it:

It's literally one line of code.
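The formula and shader from the original post are not reproduced here, so the following is only a sketch under an assumption: it uses the Pegtop soft light formula, one of several soft light variants in circulation, and it may not be the one used in A Case of the Mondays. It is written in C on a single channel, and the function name is hypothetical.

```c
/* Pegtop soft light on one channel (an assumed variant, see above).
   base and blend are assumed to be in [0, 1]. */
float blend_soft_light(float base, float blend) {
    return (1.0f - 2.0f * blend) * base * base + 2.0f * blend * base;
}
```

With this variant, a blend value of 0.5 leaves the base unchanged, lower values darken it and higher values brighten it.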

If you cycle through all the blend modes and you cannot find one that you like, you're free to make your own. You can play with the opacity levels of the images, the hue, saturation etc. until you create the look you are going for. Then, to create your own blending equation, just look at your history in Photoshop. You should be able to derive a shader that performs the effect by looking up the function for each effect you applied and throwing them into a shader.

Blending in OpenGL

OpenGL has a wide array of different blend functions that it will do for you with the change of a state.

However, getting your scene to blend nicely can be tricky, especially when you have multiple passes you want to composite together, and having transparent objects makes blending even trickier.

The OpenGL wiki has a fantastic page about all of the different blending options in OpenGL, so I won’t spend a lot of time restating what that very descriptive wiki page already states.

The basic idea of blending is: if you are drawing an object on top of another, how do the overlapping fragments behave?

If you have an opaque object with depth testing enabled, the fragments that belong to the object that is closer to the camera will be drawn with full opacity. Simple enough, but if you have a transparent object in front of an object, you need to take the alpha channel of the fragment into consideration. Fortunately there are built-in OpenGL blend functions that do this for you. However, it is up to you to draw the objects in the correct order (transparent objects are drawn last).
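One way to get the draw order described above is to sort the draw list before rendering. This is a minimal sketch in C, not the game's actual code: the `DrawItem` struct, its fields and the function names are all hypothetical. It puts opaque objects first and then sorts the transparent ones from farthest to nearest, which is a common convention for alpha blending.

```c
#include <stdlib.h>

/* Hypothetical draw list entry; the names are illustrative only. */
typedef struct {
    float view_depth;   /* distance from the camera */
    int   transparent;  /* 1 if the object needs alpha blending, else 0 */
    int   id;
} DrawItem;

/* Opaque items come first; transparent items follow, farthest first. */
static int compare_draw_items(const void *pa, const void *pb) {
    const DrawItem *a = pa;
    const DrawItem *b = pb;
    if (a->transparent != b->transparent)
        return a->transparent - b->transparent;
    if (a->transparent) {
        if (a->view_depth > b->view_depth) return -1;
        if (a->view_depth < b->view_depth) return 1;
    }
    return 0;
}

/* Reorder the list in place so it can be drawn front to end. */
void sort_for_drawing(DrawItem *items, size_t count) {
    qsort(items, count, sizeof *items, compare_draw_items);
}
```

The relative order of the opaque items is left unspecified here, since the depth test resolves their overlap.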

Here is a demonstration of the light accumulation pass in A Case of the Mondays using a couple of different blending modes.

Above is a screenshot of the light accumulation using the following blending states:

glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA);

Here is the same screenshot using different blending options:

glBlendEquation(GL_FUNC_ADD);

glBlendFunc(GL_ONE, GL_ONE);

Notice that the two images look very different and all I did was change a few OpenGL states. The first image is a lot darker than the second. In the game we use the second option simply because the light has more of a presence and therefore influence in the scene.
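To see why the two setups differ, the blend arithmetic can be emulated on the CPU. The sketch below is in C on a single channel; the function names are hypothetical, and the clamp assumes a normalized color buffer. Both cases use the default `GL_FUNC_ADD` equation, where the result is the source times its factor plus the destination times its factor.

```c
/* Clamp to [0, 1], as happens when writing to a normalized color buffer. */
static float clamp01(float x) {
    if (x < 0.0f) return 0.0f;
    if (x > 1.0f) return 1.0f;
    return x;
}

/* glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA):
   result = src * src_alpha + dst * (1 - src_alpha) */
float blend_alpha(float src, float src_alpha, float dst) {
    return clamp01(src * src_alpha + dst * (1.0f - src_alpha));
}

/* glBlendFunc(GL_ONE, GL_ONE):
   result = src + dst */
float blend_one_one(float src, float dst) {
    return clamp01(src + dst);
}
```

With a light contribution of 0.5 at alpha 0.5 drawn over an existing value of 0.25, the first setup gives 0.375 and the second gives 0.75. The first setup scales every contribution down by its alpha and attenuates what was already there, while the second keeps accumulating light.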
